23 July 2025 — By Trish Thomas

Introducing our conversational search agent

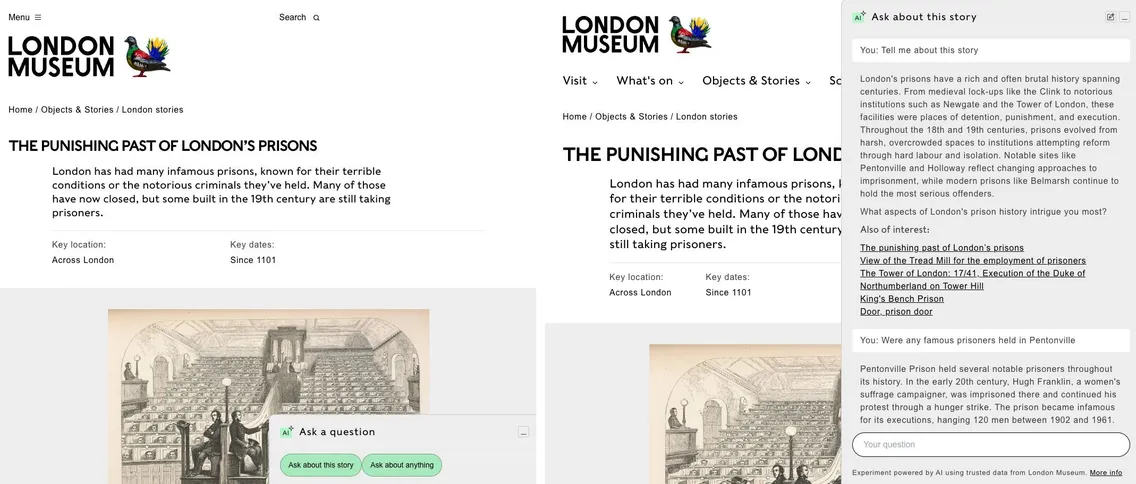

Welcome to Clio 1.0, our new conversational search agent. It’s here to help you explore the museum’s collections and stories more intuitively, using natural language, so it's like having a chat with a helpful guide.

So, what's it all about?

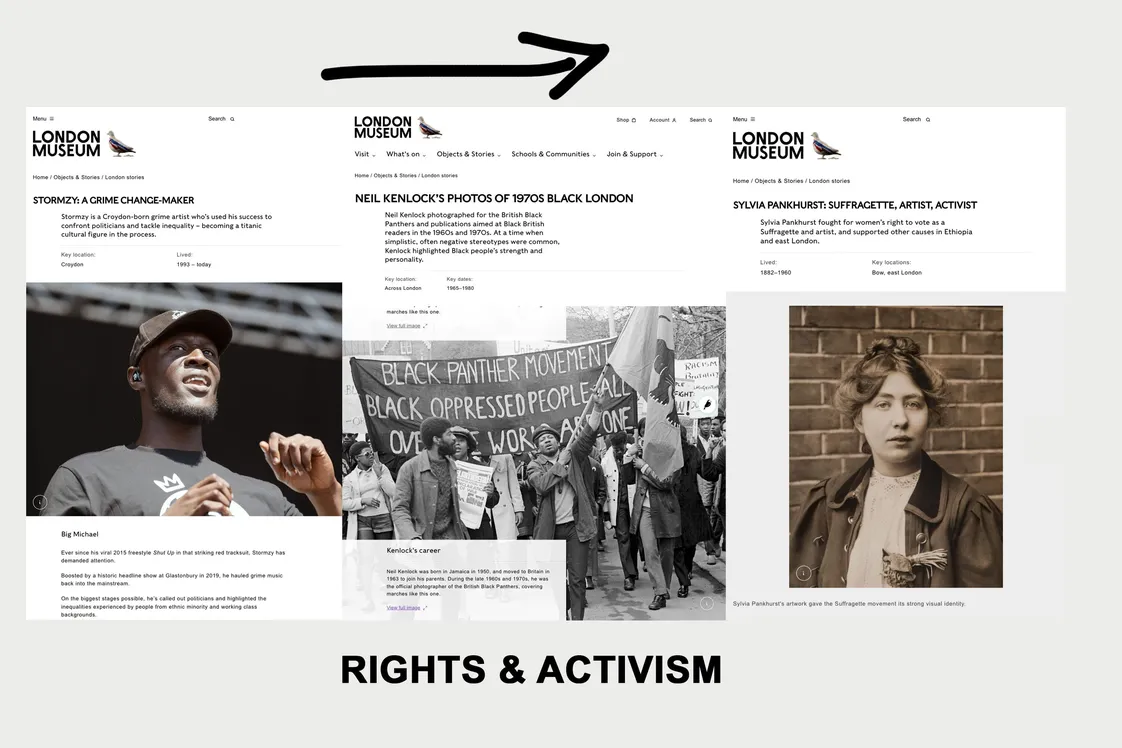

This is an experiment to see how audiences might engage differently with our content and collections.

The search is powered by artificial intelligence (AI) and uses only London Museum’s trusted data to answer your questions. This means that if information relating to your query exists elsewhere online but isn't included in London Museum's data, it won’t be reflected in our answers.

By drawing on our multiple sources, it can give you more rounded answers to your questions, instead of signposting you to a list of web pages as a conventional search function does.

Below the chat answer, you'll also notice links to some related pages you might want to explore.

How to get the most from it

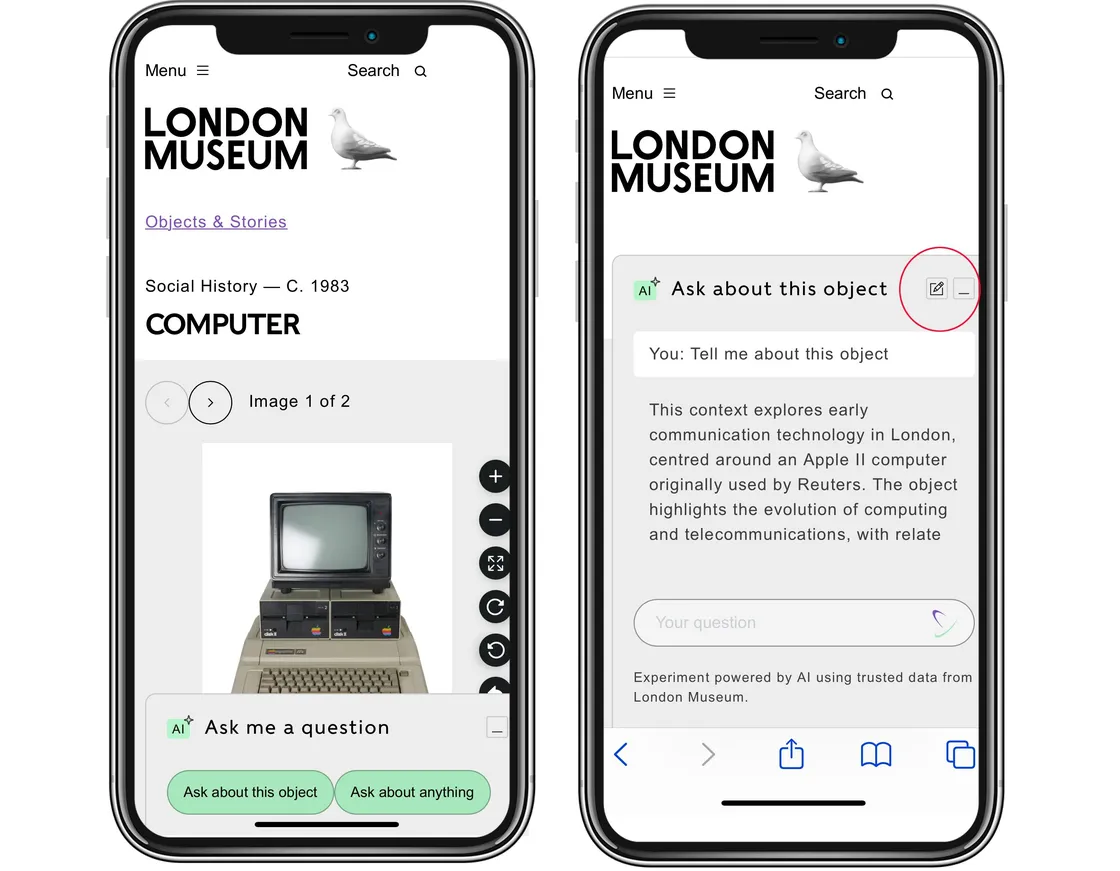

The chat pop-up appears at the bottom right of all our collections object pages, London stories and blogs.

You can ask Clio 1.0 questions about the page you're currently on, or ask more widely about our collections, stories and blogs as a whole. You can phrase your questions in natural language, just as you would speak them, for example:

- "do you have any Roman jewellery?"

- "do you have any objects from Lewisham?"

- "do you have any paintings of the Thames?"

- "how many people died in the Great Fire of London?"

- "were there any male Suffragettes?"

It’s OK to use complete questions or short phrases, as the search understands both. If you don’t get the answer you expected, try tweaking the wording and asking again. It helps to be specific, but not too technical. For example, try “paintings of animals” instead of “faunal oil representations.”

If you want your answer to include links, you might need to specify that in your question, eg "do you have any paintings of the Thames? Include links".

You might also notice that Clio 1.0 gives slightly different answers to the same question if you ask it more than once. This is because it always takes into account what you’ve previously asked and how you asked it.

You can use the chat refresh button at any time to clear your history. This will also refresh the 'also of interest' links.

When is it better to use the conventional collections search or site search?

It's better to use our collections or site search if:

- you're looking for a specific object by its unique ID number – the collections search will take you straight to that object's page

- you want to narrow down your results, for example by date range or object material – the collections search filters are better suited to this

- you're asking a question about how many objects of a certain type we hold in our collection – in this case, using the collections keyword search and filters will also be your best option

- you're looking for information about our policies – conversational search by nature paraphrases, so if you’re looking for the museum’s position on a specific issue, it’s better to use our site search and refer directly to the relevant documents

Are there limitations?

Yes, absolutely, but we think the pros outweigh the cons! Here are some of the limitations we're aware of:

- Clio 1.0 uses only London Museum’s trusted data to answer your questions, so if information relating to your query exists online but outside our data, it won’t be included in our chat answers – the upside is that the answers you get draw only on credible sources

- conversational search tools often summarise or interpret collection data using natural language, which means that dates, titles or materials might be simplified or rounded

- while you can trust that London Museum’s collections data is credible, it may be incomplete in some cases, and the chat can only give you an answer if it can find the data it needs

- we’re training Clio 1.0 to use appropriate language terms based on inclusive standards, but we're aware that offensive language and outdated terminology exist in our older records. As a result, it may occasionally surface terms we no longer find acceptable. Moving forwards, we aim to standardise our language, making conscious choices about the terms we will and won't use. Find out more about our acceptable language approach

- the chat isn’t trained to search in languages other than English, so although it may try to answer questions in other languages, we can't guarantee the quality of those answers

The quality of the chat will improve over time and with more usage, as AI technology develops and as we continue to train it based on user interactions.

What it can't do

If you ask it an existential question like "what’s the point in museums?", it’s likely to give you an existential answer! You can interpret this as you choose, but it won't necessarily represent the views of London Museum. The same applies if you ask it a subjective question like "what’s the best object in the collection?".

It can’t show image results in the chat window (if you ask "show me all your paintings of the Thames", for example), but it can signpost you to key pages where you can see the images.

We’ve chosen not to display image results because we want to limit the environmental impact of the search: displaying images in this way uses a lot of data.

Your privacy

You should avoid sharing personal or sensitive information (like your full name, address, passwords, bank details or medical records) during conversational searches. We store the anonymised chat data for up to 90 days in order to help us refine the way it works.

Am I killing the planet by using it?

The museum’s policy is to prioritise AI solutions that minimise energy consumption and environmental impact, contributing to our wider sustainability goals. We’ve opted not to include rich media such as images, audio and video to reduce energy consumption. Currently, we use two AI services to deliver our conversational search:

OpenAI’s text-embedding-3-small model – a lightweight, task-specific model rather than a general-purpose language model.

Anthropic’s Claude 3.5 Haiku model – the Haiku models are the smallest and fastest in Anthropic's Claude family:

- less training time is needed because we're working with a focused dataset, which means fewer emissions upfront

- all this means lower power use at runtime, and so a smaller carbon footprint per task
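The post doesn't describe exactly how these two services work together. A common way to pair an embedding model with a small language model is retrieval-augmented generation: questions and museum records are turned into vectors, the closest records are retrieved, and only those records are handed to the language model. The sketch below assumes that pattern. The toy three-number vectors, the document titles and the `retrieve` and `build_prompt` helpers are all hypothetical, and no real OpenAI or Anthropic API is called.

```python
import math

# Hypothetical toy "embeddings". In a real system these vectors would come from an
# embedding model such as text-embedding-3-small; here they are hand-made
# 3-dimensional vectors purely for illustration.
DOCUMENTS = {
    "Roman gold ring": [0.9, 0.1, 0.0],
    "Painting of the Thames at Greenwich": [0.1, 0.9, 0.2],
    "Great Fire of London story": [0.0, 0.2, 0.9],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def retrieve(query_vector: list[float], k: int = 2) -> list[str]:
    """Return the titles of the k toy documents most similar to the query."""
    ranked = sorted(
        DOCUMENTS,
        key=lambda title: cosine_similarity(query_vector, DOCUMENTS[title]),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Hypothetical prompt that tells the language model to use only retrieved records."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the museum records below.\n"
        f"Records:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    question = "do you have any paintings of the Thames?"
    query_vector = [0.2, 0.8, 0.1]  # hypothetical embedding of the question
    print(build_prompt(question, retrieve(query_vector, k=1)))
```

Restricting the prompt to retrieved records is one way a system like this could keep answers within the museum's own data, which matches the "trusted data only" behaviour described earlier.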

To put things in perspective, one conversational AI query uses about 100–600x more energy than a traditional Google search. Even so, asking 500–1,000 queries uses about the same energy as boiling one kettle of water.
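As a rough check on the kettle comparison, here is the arithmetic under assumptions that are ours rather than the museum's: one litre of water (1 kg) heated from 20 °C to 100 °C, a specific heat capacity for water of about 4,186 J/(kg·K), and no heat lost to the kettle or the air.

$$
\begin{aligned}
E_{\text{kettle}} &= m\,c\,\Delta T = 1\,\text{kg} \times 4186\,\tfrac{\text{J}}{\text{kg}\cdot\text{K}} \times 80\,\text{K} \approx 335\,\text{kJ} \approx 93\,\text{Wh} \\
E_{\text{query}} &\approx \frac{93\,\text{Wh}}{500\ \text{to}\ 1000\ \text{queries}} \approx 0.09\ \text{to}\ 0.19\,\text{Wh per query}
\end{aligned}
$$

A real kettle loses some heat and is often filled with more than a litre, so the true energy per boil, and the implied energy per query, would be somewhat higher.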

Trish Thomas is Head of Digital Innovation at London Museum.